Docker is a commonly used tool in modern software development for deploying applications to web servers in a consistent, reproducible manner. It's been described as basically a "lightweight virtual machine," and it builds on Linux kernel features (namespaces and control groups) that together make up what are known as containers.

How does it work?

In this post I'll try to briefly sum up what Linux containers do and what problem they solve for developers.

This blog post is non-technical: I won't be showing Docker commands or similar details (there is plenty of better documentation on the Internet for that). Instead, it's a high-level overview of how we got here and what containers do for us today.

To understand Docker, it may help to first understand virtual machines, which IMHO are a lot more accessible to the "common user."

A popular virtual machine program is VirtualBox. You can install VirtualBox on your Windows PC (or your MacBook, or your Linux desktop), and then, within VirtualBox, you can "install" an alternative operating system -- without affecting your currently running system.

For example, under macOS you could install Windows 10 in VirtualBox. It would run inside an application window on your Mac desktop, and you could run all your usual Windows software as though it were a separate PC (within reason: 3D games don't always run well under virtualization).

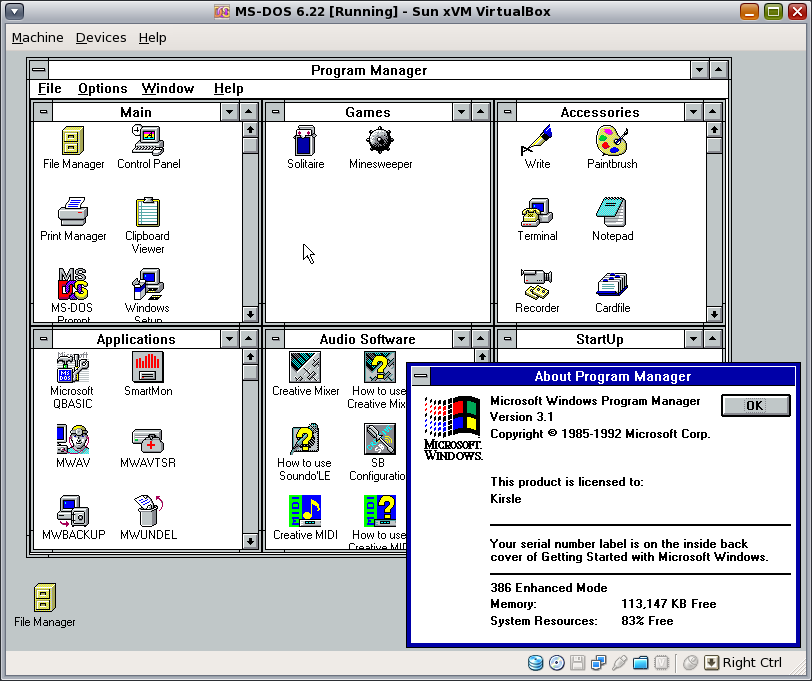

Here is a screenshot a while back of me running Windows 3.1 inside VirtualBox on my Linux desktop:

A virtual machine is software pretending to be a computer. When the "Guest OS" looks at its hard disk drive, it's actually using a file sitting on the disk of your "Host OS." When the Guest OS looks for its sound card or graphics card, or interacts with its network card, these devices don't physically exist: VirtualBox is emulating them.

A useful feature of a virtual machine is that you can install an operating system and some software, get it all configured to run your application exactly as intended, and then take its virtual hard disk file, copy it to a different physical machine and run it there. Since the virtual machine is completely self-contained, it will run exactly the same way on the other machine.

Take for example the decentralized social network, Mastodon. It's an open source web app written in Ruby. You can host it on your own server, but the installation steps are very long: install Ruby, install a web server, configure things, lots of work.

If you were to install Mastodon inside a VirtualBox virtual machine, you could set it up once and then take the entire virtual machine's disk image to another computer and run it there without repeating all these steps.

With a virtual machine you have full control over the operating system and how it's all configured, and when you get it working, it works "everywhere."

Virtual machines are heavy.

When you configure a virtual machine, an important setting is how much memory (RAM) you're going to give it. For example, Windows 10 needs at least 4 GB of memory to run comfortably. And when you give 4 GB of RAM to a virtual machine, you're giving it exclusively to the virtual machine: your Host OS cannot use that RAM anymore. Otherwise, if Windows 10 needed the memory and it wasn't available, things could go wrong, like programs crashing, and you don't want that.

But chances are your Guest OS doesn't need all 4 GB of that memory all the time, and so it's wasteful to reserve so much memory for it. And if you need to run multiple virtual machines -- your web app, your database server, etc. -- needing to reserve large swaths of resources for each VM limits how many you can run on a single physical machine.
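To put rough numbers on this, here is a small Python sketch of the arithmetic. It assumes, as described above, that each VM's RAM is reserved up front and that the host keeps some memory for itself; the function name `max_vms` and the example figures are hypothetical, chosen only for illustration.

```python
def max_vms(host_ram_gb: float, host_reserve_gb: float, per_vm_gb: float) -> int:
    """How many VMs fit if each one reserves its RAM exclusively.

    host_reserve_gb is the memory the Host OS keeps for itself
    (a hypothetical figure; pick whatever your host actually needs).
    """
    available_gb = host_ram_gb - host_reserve_gb
    if available_gb <= 0 or per_vm_gb <= 0:
        return 0
    # Whole VMs only: a VM can't run on a fraction of its reservation
    return int(available_gb // per_vm_gb)


# Hypothetical host: 32 GB of RAM, 4 GB kept for the Host OS, 4 GB per VM
print(max_vms(32, 4, 4))  # prints 7
```

Whether each VM actually uses its full 4 GB doesn't change the answer, and that gap between reserved and used memory is the waste described above.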

Let's fast-forward a few decades and come straight to Docker.

Compared to full-fat virtual machines with emulated hardware and dedicated resources, Docker containers are very lightweight.

So what does this all mean?

Suppose you have a physical computer that's running Fedora Linux. Inside of a Docker container, you install Ubuntu, which is another "flavor" of Linux.

Fedora and Ubuntu differ in numerous ways: they use a different kind of "package manager" to install and update software, they use slightly different names for the software they install, and so on.

Under Docker, the Fedora kernel of the Host OS is shared by the Ubuntu container. They're both Linux, so this is fine.

However, when the Ubuntu container looks at its file system and the software installed on it, it sees an Ubuntu system: there are files like /etc/lsb-release, and commands like apt and dpkg are available. The Ubuntu container does NOT see the Fedora host's file system: it doesn't know what software Fedora has installed, it can't see the users that exist on the Fedora system, and so on.
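You can see this for yourself without any Docker commands. Most Linux distributions, including Fedora and Ubuntu, describe themselves in a file called `/etc/os-release`. The Python sketch below reads that file: run on the Fedora host it should report Fedora, and run inside the Ubuntu container (assuming Python is installed there, which a bare Ubuntu image may not have) it should report Ubuntu, because each one only sees its own file system. The parser is a simplified assumption: it handles plain and quoted `KEY=value` lines but not every escaping rule of the os-release format, and the helper names are hypothetical.

```python
from typing import Dict


def parse_os_release(text: str) -> Dict[str, str]:
    """Parse os-release style KEY=value lines into a dict (simplified)."""
    info: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        # Skip blank lines, comments and anything that isn't KEY=value
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        # Values may be wrapped in matching single or double quotes
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        info[key.strip()] = value
    return info


def current_distro(path: str = "/etc/os-release") -> str:
    """Return the distribution name this process sees, or 'unknown'."""
    try:
        with open(path, encoding="utf-8") as f:
            info = parse_os_release(f.read())
    except FileNotFoundError:
        return "unknown"
    return info.get("PRETTY_NAME") or info.get("NAME", "unknown")


if __name__ == "__main__":
    # Reports whichever distribution's /etc/os-release this process can see
    print(current_distro())
```

If the file is missing, the function falls back to `unknown` rather than guessing.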

When you run an application from a Docker container, there is virtually no overhead compared to just running the application without Docker.

Take the Mastodon example from earlier: you could manually install it on your Linux server and run the Mastodon software, and it would use only the memory and CPU that the application itself needs, just like any other software.

If you ran Mastodon inside a fat virtual machine like VirtualBox, you would need to dedicate a relatively large chunk of RAM to it, because the virtual machine needs enough RAM for its whole operating system to run comfortably.

With Docker, your host Linux kernel runs the Mastodon program the same way as if you ran it directly. However, when Mastodon looks at its file system or its network, it sees the container's "virtual" file system and a limited (firewalled) network connection. No dedicated resources or emulated hardware are needed for this.

And so you can run many Docker containers on a single host machine without needing a ton of extra RAM to devote to each one. It's not uncommon to have 20, 30 or 50 Docker apps running together -- each one isolated from the next, each with its own file system and no knowledge of the host or of the existence of its Docker neighbors. Good luck running 50 fat virtual machines on one system together -- unless you have like 192 GB of system RAM to throw around!

Why do all of this anyway?

To simplify deployment of applications.

At work I build Python web applications. To install these from scratch on a new system, I need to install Python, install a bunch of dependencies, download my app from GitHub, maybe run a few steps to build front-end code that goes with my app, and then run the app itself. I also need a web server that forwards requests to my app. It's a lot of steps, not unlike the steps needed to install Mastodon.

In a business environment, you often need multiple servers that you deploy your app to: one for development, where you can deploy potentially-broken code and test it; one for staging, where you can deploy "to production" but with a safety net in case it blows up (you don't want your production website to go down!), and finally a production server that "real users" are interacting with.

If you need to set up each and every server manually, this leaves lots of room for human error. If a new feature of your app brings in a new dependency and you forget to install it on production, bam! Your app goes down.

Wouldn't it be nice if you could deploy to staging, test everything there, and when you sign off on it, deploy to production and just know it's going to work exactly as well as it did on staging?

This is what Docker solves.

A brief history of how web developers have deployed their applications:

We've been talking about Mastodon this whole post, and the project has a Dockerfile too, so you can see what one looks like. It starts from an Ubuntu base, runs a series of commands to install and configure Mastodon on it, and produces an image ready to run, or deploy to your servers, as a container. Your server doesn't need to run Ubuntu itself: it could be Fedora, Debian or any other Linux distribution. It doesn't matter, because the image describes a fully self-contained Ubuntu system that runs the application the same way regardless of the host's configuration.

There are 3 comments on this page.

nice

Soo, how do you get docker? How would you go about running a VM with all your system resources? Is there any simple way to do this? I'm on Manjaro (Arch) Linux by the way


Docker is usually shipped by your Linux distribution's maintainers. On Fedora you can `dnf install docker`; on Ubuntu or Debian the package is called `docker.io`, so `apt install docker.io`. I'm not familiar with Manjaro, but it's probably available in your package manager. Next steps typically include:

- `sudo systemctl enable docker && sudo systemctl start docker` on systemd distros.
- Add your user to the `docker` group so you don't need to `sudo docker` all the time: `sudo usermod -aG docker <your_username>`
- `sudo docker run hello-world` to test it's all working.

And work through some tutorials! It's a fair bit to learn for a beginner. You can also look at some example Dockerfiles to see how people use it: most open source web apps have Dockerfiles (WordPress, Nextcloud, Gitea, etc.), as do databases like PostgreSQL or MySQL. Just google "<insert product here> Dockerfile".

Vagrant works similarly to Docker but it produces a virtual machine image, like for VirtualBox or VMware. Last I checked it can also deploy your OS to various cloud providers like Amazon AWS or DigitalOcean (particularly to Virtual Private Servers).
